De-mystifying Cloud Computing: the Pros and Cons of Cloud Services

Cloud computing has been a part of the corporate and consumer lexicon for the past 15 years. Despite this, many organizations and their users are still fuzzy on the finer points of cloud usage and terminology.

De-mystifying the cloud

So what exactly is a cloud computing environment?

The simplest and most straightforward definition is that a cloud is a grid- or utility-style, pay-as-you-go computing model that uses the web to deliver applications and services in real time.

Organizations can opt to deploy a private cloud infrastructure where they host their services on-premises from behind the safety of the corporate firewall. The advantage here is that the IT department always knows what’s going on with all aspects of the corporate data, from bandwidth and CPU utilization to all-important security issues.

Alternatively, organizations can choose a public cloud deployment in which a third-party vendor such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud, IBM Cloud or Oracle Cloud hosts the services at an off-premises remote location. This scenario saves businesses money and staff hours by utilizing the host provider’s equipment and management. All that’s needed is a web browser and a high-speed internet connection to connect to the host to access applications, services and data.

However, the public cloud infrastructure is also a shared model in which corporate customers share bandwidth and space on the host’s servers. Enterprises that prioritize privacy and require near-impenetrable security, and those that require more data control and oversight, typically opt for a private cloud infrastructure in which the hosted services are delivered to the corporation’s end users from behind the safe confines of an internal corporate firewall.

A private cloud is nonetheless more than just a hosted services model that exists behind a firewall. Any discussion of private and/or public cloud infrastructure must also include virtualization. While most virtualized desktop, server, storage and network environments are not yet part of a cloud infrastructure, just about every private and public cloud will feature a virtualized environment.

Organizations contemplating a private cloud also need to ensure that it delivers very high (near fault-tolerant) availability: at least “five nines” (99.999%) or “six nines” (99.9999%) uptime, or even true fault-tolerant “seven nines” (99.99999%) uptime, to ensure uninterrupted operations.
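To make the “nines” concrete, the following minimal Python sketch converts an uptime percentage into the downtime it permits per year. It assumes a 365-day year (8,760 hours) and the function name is purely illustrative.

```python
# Convert an availability percentage into the downtime it allows per year.
# Assumption: a 365-day year (8,760 hours); leap years are ignored.

HOURS_PER_YEAR = 365 * 24


def annual_downtime_minutes(availability_pct: float) -> float:
    """Return the minutes of downtime per year permitted at a given uptime percentage."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = (100 - availability_pct) / 100
    return unavailable_fraction * HOURS_PER_YEAR * 60


if __name__ == "__main__":
    levels = [
        ("three nines", 99.9),
        ("four nines", 99.99),
        ("five nines", 99.999),
        ("six nines", 99.9999),
        ("seven nines", 99.99999),
    ]
    for label, pct in levels:
        minutes = annual_downtime_minutes(pct)
        print(f"{label:12s} {pct:<10} {minutes:10.3f} minutes/year")
```

Under these assumptions, five nines leaves a little over five minutes of downtime per year, and each additional nine cuts that allowance by a factor of ten.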

Private clouds should also be able to scale dynamically to accommodate the needs and demands of the users. And unlike most existing, traditional datacenters, the private cloud model should also incorporate a high degree of user-based resource provisioning. Ideally, the IT department should also be able to track resource usage in the private cloud by user, department or groups of users working on specific projects for chargeback purposes. Private clouds will also make extensive use of AI, analytics, business intelligence and business process automation to guarantee that resources are available to the users on demand.
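As a rough illustration of chargeback tracking, the sketch below totals resource usage by department and prices it at internal rates. The record fields, the rates and the function name are all hypothetical; a real private cloud would pull this data from its own metering tools.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One metered usage entry (hypothetical fields for illustration)."""
    user: str
    department: str
    cpu_hours: float
    storage_gb_months: float


# Hypothetical internal rates, in dollars.
CPU_HOUR_RATE = 0.05
STORAGE_GB_MONTH_RATE = 0.02


def chargeback_by_department(records):
    """Sum the cost of each record and group the totals by department."""
    totals = defaultdict(float)
    for record in records:
        cost = (record.cpu_hours * CPU_HOUR_RATE
                + record.storage_gb_months * STORAGE_GB_MONTH_RATE)
        totals[record.department] += cost
    # Round to cents for reporting.
    return {dept: round(cost, 2) for dept, cost in totals.items()}


if __name__ == "__main__":
    sample = [
        UsageRecord("alice", "engineering", cpu_hours=1000, storage_gb_months=500),
        UsageRecord("bob", "engineering", cpu_hours=200, storage_gb_months=100),
        UsageRecord("carol", "finance", cpu_hours=100, storage_gb_months=2000),
    ]
    for department, cost in chargeback_by_department(sample).items():
        print(f"{department}: ${cost:,.2f}")
```

The same grouping could be keyed by user or by project instead of department, depending on how the organization wants to allocate costs.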

All but the most cash-rich organizations (and there are very few of those) will almost certainly have to upgrade their network infrastructure in advance of migrating to a private cloud environment. Organizations considering outsourcing any of their datacenter needs to a public cloud will also have to perform due diligence to determine the bona fides of their potential cloud service providers.

In 2022 and beyond, a hybrid cloud environment is the most popular model, chosen by over 75% of corporate enterprises. The hybrid cloud theoretically gives businesses the best of both worlds: some services and applications are hosted on a public cloud, while other specific, crucial business applications and services remain in a private or on-premises cloud behind a firewall.

Types of Cloud Computing Services

There are several types of cloud computing models. They include:

- Software as a Service (SaaS) utilizes the Internet to deliver software applications to customers. Examples include Salesforce.com, which offers one of the earliest, most popular and most widely deployed cloud-based CRM applications, and Google Apps (since rebranded as Google Workspace), which is among the market leaders. Google Apps originally came in three editions: Standard, Education and Premier (the first two were free). It provides consumers and corporations with customizable versions of the company’s applications like Google Mail, Google Docs and Calendar.

- Platform as a Service (PaaS) provides a hosted platform on which customers build and run their applications. Examples include the above-mentioned Amazon Web Services and Microsoft’s top-tier Azure Platform. The Microsoft Azure offering contains all the elements of a traditional application stack, from the operating system up to the applications and the development framework. It includes the Windows Azure Platform AppFabric (formerly .NET Services for Azure) as well as the SQL Azure Database service. Customers that build applications for Azure will host them in the cloud. However, it is not a multi-tenant architecture meant to host your entire infrastructure. With Azure, businesses rent resources that will reside in Microsoft datacenters. The costs are based on a per-usage model. This gives customers the flexibility to rent fewer or more resources depending on their business needs.

- Infrastructure as a Service (IaaS) is exactly what its name implies: the entire infrastructure becomes a multi-tiered hosted cloud model and delivery mechanism. Public, private and hybrid IaaS deployments should all be flexible and agile. The resources should be available on demand and should be able to scale up or scale back as business needs dictate.

- Serverless is a more recent technology innovation, and it can be a bit confusing to the uninitiated. A serverless cloud is a cloud-native development model that enables cloud developers to build and run applications without having to manage servers. The developers do not manage, provision or maintain the servers when deploying code. The actual code execution is fully managed by the cloud provider, in contrast to the traditional method of writing and developing applications and then deploying them on servers. To be clear, there are still servers in a serverless model, but they are abstracted away from application development.
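To illustrate what “without having to manage servers” looks like in practice, here is a minimal Python sketch of a function written in the style of AWS Lambda’s Python handler convention, where the platform calls a function with an `event` and a `context` argument. The function name, the `name` field in the event and the shape of the response are assumptions made for this example, not a prescribed format.

```python
import json


def handler(event, context):
    """Entry point the platform would invoke once per request or event.

    The developer writes only this function; provisioning, scaling and
    patching of the underlying servers is left to the cloud provider.
    """
    # "name" is a hypothetical field chosen for this illustration.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation for illustration only; in a serverless deployment
    # the provider, not the developer, calls handler().
    print(handler({"name": "cloud"}, None))
```

Note that nothing in the code refers to a host, a port or an operating system, which is the abstraction the serverless model is meant to provide.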

Cloud computing—pros and cons

Cloud computing, like any technology, is not a panacea. It offers both potential benefits as well as possible pitfalls. Before beginning any infrastructure upgrade or migration, organizations are well advised to gather all interested parties and stakeholders and construct a business plan that best suits their organization’s needs and budget. When it comes to the cloud, there are no absolutes. Many organizations will have hybrid clouds that include public and private cloud networks. Additionally, many businesses may have multiple cloud hosting providers present in their networks. Whatever your firm’s specific implementation, it’s crucial to create a realistic set of goals, a budget and a deployment timetable.

Prior to beginning any technology migration, organizations should first perform a thorough inventory and review of their existing legacy infrastructure and make the necessary upgrades, revisions and modifications. All stakeholders within the enterprise should identify the company’s current tactical business goals and map out a two-to-five-year cloud infrastructure and services business plan. This should incorporate an annual operational and capital expenditure budget. The migration timetable should include server hardware, server OS and software application interoperability and security vulnerability testing; performance and capacity evaluation; and final provisioning and deployment.

Public clouds—advantages and disadvantages

The biggest allure of a public cloud infrastructure over traditional premises-based network infrastructures is the ability to offload the tedious and time-consuming management chores to a third party. This in turn can help businesses:

- Shave precious capital expenditure monies because they avoid the expensive investment in new equipment, including hardware, software and applications, as well as the attendant configuration planning and provisioning that accompanies any new technology rollout.

- Accelerate the deployment timetable. Having an experienced third-party cloud services provider do all the work speeds up deployment and most likely means less time spent on trial and error.

- Construct a flexible, scalable cloud infrastructure that is tailored to their business needs. A company that has performed its due diligence and is working with an experienced cloud provider can architect a cloud infrastructure that will scale up or down according to the organization’s business and technical needs and budget.

Public Cloud Downsides

Shared Tenancy: The potential downside of a public cloud is that the business is essentially “renting” or sharing common virtualized servers and infrastructure tenancy with other customers. This is much like being a tenant in a large apartment building. Depending on the resources of the particular cloud model, there exists the potential for performance, latency and security issues, as well as questions about whether response times, service and support from the cloud provider will be acceptable.

Risk: Risk is another potential pitfall associated with outsourcing any of your firm’s resources and services to a third party. To mitigate risk and lower it to an acceptable level, it’s essential that organizations choose a reputable, experienced third party cloud services provider very carefully. Ask for customer references. Cloud services providers must work closely and transparently with the corporation to build a cloud infrastructure that best suits the business’ budget, technology and business goals. To ensure that the expectations of both parties are met, organizations should create a checklist of items and issues that are of crucial importance to their business and incorporate them into service level agreements (SLAs). Be as specific as possible. These should include but are not limited to:

- What types of equipment do they use?

- How old is the server hardware? Is the configuration powerful enough?

- How often is the data center equipment/infrastructure upgraded?

- How much bandwidth does the provider have?

- Does the service provider use open standards or is it a proprietary datacenter?

- How many customers will you be sharing data/resources with?

- Where is the cloud services provider’s datacenter physically located?

- What specific guarantees, if any, will it provide for securing sensitive data?

- What level of guaranteed response time will it provide for service and support?

- What is the maximum latency/response time it will guarantee for its cloud services?

- Will it provide multiple access points to and from the cloud infrastructure?

- What specific provisions will apply to Service Level Agreements (SLAs)?

- How will financial remuneration for SLA violations be determined?

- What are the capacity ceilings for the service infrastructure?

- What provisions will there be for service failures and disruptions?

- How are upgrade and maintenance provisions defined?

- What are the costs over the term of the contract agreement?

- How much will the costs rise over the term of the contract?

- Does the cloud services provider use Transport Layer Security (TLS, the successor to the now-deprecated Secure Sockets Layer, SSL) and state-of-the-art AES encryption to transmit data?

- Does the cloud services provider encrypt data at rest to prohibit and restrict access?

- How often does the cloud services provider perform audits?

- What mechanisms will it use to quickly shut down a hack, and can it track a hacker?

- If your cloud services provider is located outside your country of origin, what are the privacy and security rules of that country and what impact will that have on your firm’s privacy and security issues?
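Several of the questions above, notably how remuneration for SLA violations is determined, come down to simple arithmetic once the terms are agreed. The sketch below shows one way such a calculation might work, using a tiered credit schedule. The tiers, percentages and function names are hypothetical and do not reflect any particular provider’s terms; the real values belong in the negotiated SLA.

```python
# Hypothetical credit schedule: (minimum monthly uptime %, credit as % of monthly fee).
# Ordered from best to worst uptime; real tiers come from the negotiated SLA.
CREDIT_TIERS = [
    (99.99, 0),    # SLA met, no credit owed
    (99.0, 10),
    (95.0, 25),
    (0.0, 100),
]


def monthly_uptime_pct(downtime_minutes: float, days_in_month: int = 30) -> float:
    """Return measured uptime for the month as a percentage."""
    total_minutes = days_in_month * 24 * 60
    if not 0 <= downtime_minutes <= total_minutes:
        raise ValueError("downtime must be between 0 and the length of the month")
    return 100 * (total_minutes - downtime_minutes) / total_minutes


def sla_credit(monthly_fee: float, downtime_minutes: float, days_in_month: int = 30) -> float:
    """Return the service credit owed for the month under the schedule above."""
    uptime = monthly_uptime_pct(downtime_minutes, days_in_month)
    for minimum_uptime, credit_pct in CREDIT_TIERS:
        if uptime >= minimum_uptime:
            return round(monthly_fee * credit_pct / 100, 2)
    return 0.0  # not reached, because the last tier starts at 0.0


if __name__ == "__main__":
    for minutes in (0, 60, 1000, 3000):
        print(f"{minutes:5d} minutes down -> credit ${sla_credit(10_000, minutes):,.2f}")
```

Writing the schedule down this explicitly, whatever the actual numbers, leaves less room for dispute when an outage occurs.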

Finally, the corporation should appoint a liaison who meets regularly with the designated counterpart at the cloud services provider. While a public cloud does provide managed hosting services, that does not mean the company should forget about it as though its data assets really did reside in an amorphous cloud! Regular meetings between the company and its cloud services provider will ensure that the company attains its immediate goals and that it is always aware of, and working toward, future technology and business goals. It will also help the corporation to understand usage and capacity issues and ensure that its cloud services provider(s) meet SLAs. Outsourcing any part of your infrastructure to a public cloud does not mean forgetting and abandoning it.

Private clouds—advantages and disadvantages

The biggest advantage of a private cloud infrastructure is that your organization retains control of its corporate assets and can safeguard and preserve its privacy and security. Your organization is in command of its own destiny. That can be a double-edged sword.

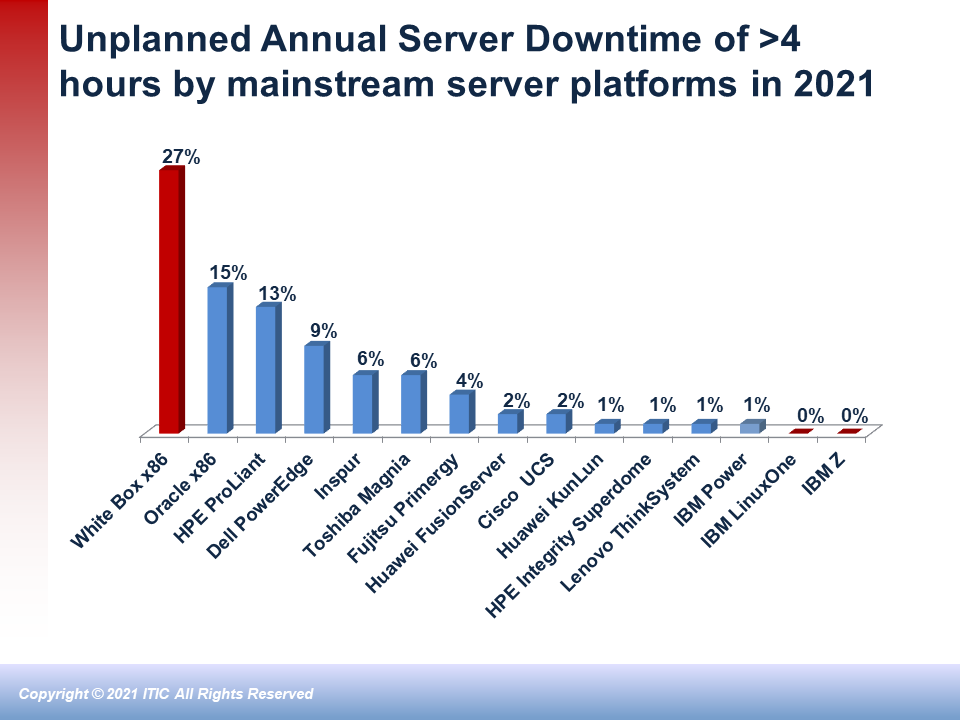

Before committing to build a private cloud model, the organization must do a thorough assessment of its current infrastructure, its budget, and the expertise and preparedness of its IT department. Is your firm ready to assume the responsibility for such a large burden from both a technical and ongoing operational standpoint? Only you can answer that. Remember that the private cloud should be highly reliable and highly available: at least 99.999% uptime with built-in redundancy and failover capabilities. Many organizations struggle to attain and maintain even 99.99% uptime and reliability, which is the equivalent of about 52.6 minutes of per server, per annum downtime (8.76 hours corresponds to 99.9%, or “three nines”). When your private cloud is down for any length of time, your employees, business partners, customers and suppliers will be unable to access resources.

Private Cloud Downsides

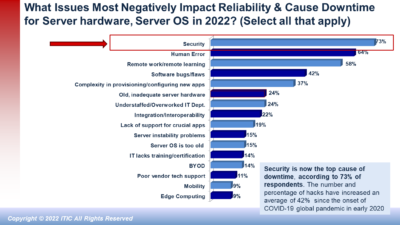

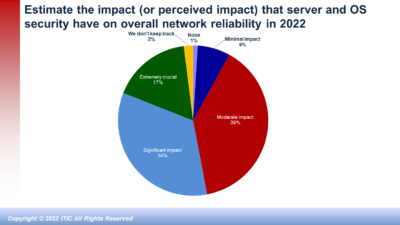

The biggest potential upside of a private cloud is also potentially its biggest disadvantage: the onus falls entirely on the corporation to achieve the company’s performance, reliability and security goals. To do so, the organization must ensure that its IT administrators and security professionals are up to date on training and certification. To ensure optimal performance, the company must regularly upgrade and rightsize its servers and stay current on all versions of mission-critical applications, particularly with respect to licensing, compliance and installing the latest patches and fixes. Security must be a priority! Hackers are professionals. And hacking is big business. The hacks themselves (ransomware, email phishing scams, CEO fraud, etc.) are more pervasive and more pernicious. And the cost of hourly downtime is more expensive than ever. ITIC’s latest survey data shows that 91% of midsize and large enterprises estimate that the average cost of a single hour of downtime is $300,000 or more. These statistics are just industry averages. They do not include any additional costs a company may incur due to penalties associated with civil or criminal litigation or compliance penalties. In other words: in a private cloud, the buck stops with the corporation.
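To put the hourly figure in perspective, the following Python sketch multiplies the downtime implied by an availability level by an hourly cost. It treats the $300,000 survey figure as a baseline, assumes a 365-day year, and assumes every hour of downtime costs the same, which is a simplification; the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24      # assumption: non-leap year
HOURLY_DOWNTIME_COST = 300_000  # dollars; the survey figure, used as a baseline


def annual_downtime_cost(availability_pct: float,
                         hourly_cost: float = HOURLY_DOWNTIME_COST) -> float:
    """Estimate yearly downtime cost at a given uptime percentage.

    Simplification: every hour of downtime is assumed to cost the same.
    """
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100")
    downtime_hours = (100 - availability_pct) / 100 * HOURS_PER_YEAR
    return downtime_hours * hourly_cost


if __name__ == "__main__":
    for pct in (99.9, 99.99, 99.999):
        print(f"{pct}% uptime -> about ${annual_downtime_cost(pct):,.0f} per year")
```

Under these assumptions, moving from 99.9% to 99.999% uptime reduces the estimated annual exposure from roughly $2.6 million to roughly $26,000, before any litigation or compliance penalties.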

Realistically, in order for an organization to successfully implement and maintain a private cloud, it needs the following:

- Robust equipment that can handle the workloads efficiently during peak usage times.

- An experienced, trained IT staff that is familiar with all aspects of virtualization, virtualization management, grid, utility and chargeback computing models.

- An adequate capital expenditure and operational expenditure budget.

- The right set of private cloud product offerings and service agreements.

- Appropriate third party virtualization and management tools to support the private cloud.

- Specific SLAs with vendors, suppliers and business partners.

- Operational level agreements (OLAs) to ensure that each person in the organization is responsible for specific tasks, both routine and in the event of an outage.

- A disaster recovery and backup strategy.

- Strong security products and policies.

- Efficient chargeback utilities, policies and procedures.

Other potential private cloud pitfalls include deciding which applications to virtualize, vendor lock-in, and integration and interoperability issues. Businesses grapple with these same issues today in their existing environments.

Conclusions

Hybrid, public and private cloud infrastructure deployments will continue to experience double-digit growth for the foreseeable future. The benefits of cloud computing will vary according to each individual organization’s implementation. Preparedness and planning prior to deployment are crucial. Cloud vendors are responsible for maintaining performance, reliability and security. However, corporate enterprises cannot simply cede total responsibility to their vendor partners because the data assets are housed off-premises. Businesses must continue to perform their due diligence. All appropriate corporate enterprise stakeholders must regularly review and monitor performance and capacity, security, compliance and SLA results, preferably on a quarterly or semi-annual basis. This will ensure your organization achieves the optimal business and technical benefits. Keeping a watchful eye on security is imperative. Cloud vendors and businesses must work in concert as true business partners to achieve optimal TCO and ROI and mitigate risk.
